local.ai Reviews: Use Cases & Alternatives

What is local.ai?

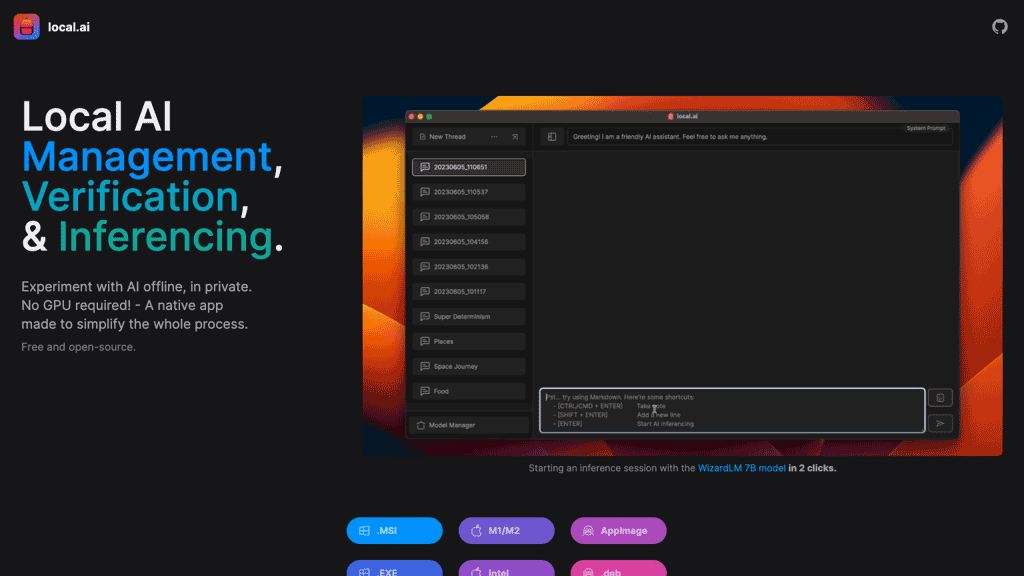

Local AI Playground by Local.ai is the go-to tool for AI management, verification, and inferencing needs. This native app simplifies the entire process, allowing users to experiment with AI offline and in private.

With no GPU required, this free, open-source tool supports browser tags, making it versatile. The tool comes with features like CPU inferencing, adaptability to available threads, and memory efficiency, in a compact size of less than 10 MB for Mac M2, Windows, and Linux.

The upcoming GPU inferencing and parallel session management features will further enhance the user experience. In addition, Local AI Playground also offers digest verification for model integrity and a powerful inferencing server for quick and seamless AI inferencing.
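To make the idea of digest verification concrete, here is a minimal sketch of how a downloaded model file could be checked against a published digest. It is not Local.ai's implementation: the choice of SHA-256, the function names `file_digest` and `verify_digest`, and the file name in the usage note are all assumptions made for illustration.

```python
import hashlib
from pathlib import Path


def file_digest(path, chunk_size=1 << 20):
    """Return the hex SHA-256 digest of a file, read in chunks.

    SHA-256 is an assumption here; the tool may use a different hash.
    Chunked reads keep memory use low for multi-gigabyte model files.
    """
    hasher = hashlib.sha256()
    with Path(path).open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def verify_digest(path, expected_hex):
    """Return True if the file's digest matches the expected hex string."""
    return file_digest(path) == expected_hex.strip().lower()
```

A caller would compare the result against the digest published alongside the model, for example `verify_digest("model.bin", published_digest)`, where both names are hypothetical, and refuse to load the file when the check returns `False`.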

AI Categories: local.ai, Developer tools, LLM, AI tool

Key Features:

Local AI Playground for AI model management and inferencing

Summary

Local AI Playground by Local.ai is an innovative offline AI management tool. It features CPU inference, memory optimization, upcoming GPU support, browser compatibility, small footprint, and model authenticity assurance for versatile experimental use.

Q&A

Q: What can local.ai do in brief?

A: Local AI Playground by Local.ai is an innovative offline AI management tool. It features CPU inference, memory optimization, upcoming GPU support, browser compatibility, small footprint, and model authenticity assurance for versatile experimental use.

Q: How can I get started with local.ai?

A: Getting started with local.ai is easy! Simply visit the official website and sign up for an account to start.

Q: Can I use local.ai for free?

A: local.ai uses a Free pricing model, meaning there is a free tier along with other options.

Q: Who is local.ai for?

A: The typical users of local.ai include:

- AI researchers

- Machine learning engineers

- Data scientists

- Students learning AI

- Anyone interested in AI experimentation

Q: Does local.ai have an API?

A: Yes, local.ai provides an API that developers can use to integrate its AI capabilities into their own applications.

Q: Where can I find local.ai on social media?

A: Follow local.ai on social media to stay updated with the latest news and features:

Q: How popular is local.ai?

A: local.ai enjoys a popularity rating of 4.79/10 on our platform as of today compared to other tools.

It receives an estimated average of 28.5K visits per month, indicating interest and engagement among users.